Comparing Deep Learning Cameras with Smart Cameras

Introduction

Deep learning is revolutionizing existing applications and driving the development of new industries. Tools from Google, Amazon, Intel, and Nvidia for creating and training neural networks are making the technology more accessible and empowering new players to enter established markets with competitive products.

The potential of deep learning is widely acknowledged. You may be working on leveraging deep learning for your application right now. At Teledyne FLIR, we’ve also thought of ways to enable machine vision developers to take advantage of this technology. The result is our Firefly® DL camera with Teledyne FLIR Neuro technology, which provides an easy path to deploying trained networks in the field. The Firefly DL marries machine vision with deep learning inference by integrating a high-quality Sony Pregius image sensor with Teledyne FLIR Neuro technology, which allows users to deploy their trained neural network directly onto the camera's VPU.

What is a VPU?

The Intel Movidius Myriad 2 Vision Processing Unit (VPU) is a new class of processor. A VPU combines high-speed hardware image processing filters, general-purpose CPU cores, and parallel vector processing cores. The vector cores used to accelerate on-camera inference are better optimized for the branching logic of neural networks than the general-purpose cores found in GPUs. This greater degree of optimization enables the VPU to achieve a high level of performance while using little power.

What is Inference?

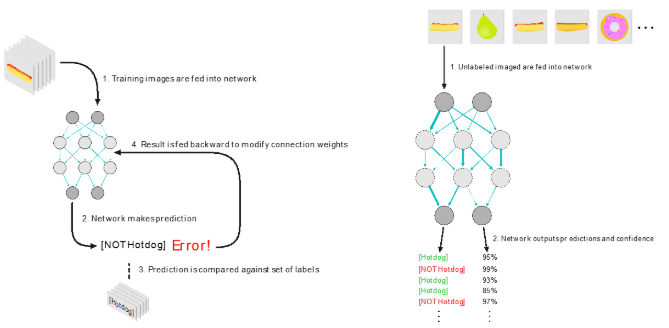

Inference is the application of deep learning to newly captured, unlabeled, real-world data: a trained neural network makes predictions based on data it has not seen before.

Fig. 1. Inference is the application of a model trained with labeled data (A) to new, unlabeled data (B).

While there are many different types of networks that can be used for inference, MobileNets are extremely well suited to performing image classification. MobileNet was originally designed by Google to perform high-accuracy image classification and segmentation on mobile devices. It provides similar accuracy to much more computationally expensive networks which require large, power-hungry GPUs.
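As a rough illustration of what inference means in practice, the sketch below applies fixed, already-trained parameters to a new input and reports the most likely label. The labels, weights, biases, and two-element feature vector are hypothetical stand-ins chosen for illustration; a real MobileNet has millions of parameters and operates on image pixels.

```python
import math

# Hypothetical parameters standing in for a trained network.
# Inference never changes them; it only applies them to new data.
LABELS = ["export_grade", "reject"]
WEIGHTS = [[0.9, -0.4],   # one row of weights per label
           [-0.7, 0.8]]
BIASES = [0.1, -0.1]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def infer(features):
    """Return (label, probability) for one new, unlabeled sample."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(WEIGHTS, BIASES)
    ]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


if __name__ == "__main__":
    label, prob = infer([1.0, 0.0])
    print(f"{label} ({prob:.2f})")
```

Training is the separate, more expensive step that produces the weights; only the lightweight forward pass shown here has to run at the point of image capture.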

How does an inference camera compare to a “smart camera”?

Traditional smart cameras combine a machine vision camera and a single-board computer running rules-based image processing software. Smart cameras are a great solution for simple problems like barcode reading or answering questions like “On this part, is the hole where it’s supposed to be?” Inference cameras excel at more complex or subjective questions like “Is this an export-grade apple?” When trained using known good images, inference cameras can easily identify unexpected defects that would not be recognized by rules-based inspection systems, making them far more tolerant to variability.

Inference cameras can be used to augment existing applications with rich, descriptive metadata. Using GenICam chunk data, the Firefly DL can use inference to tag images which are passed to a host that carries out traditional rules-based image processing. In this way, users can quickly expand the capabilities of their existing vision systems. This hybrid system architecture can also be used to trigger a traditional vision system.
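The hybrid architecture described above can be sketched from the host's point of view: each frame arrives with an inference tag, and the host runs its rules-based processing only on frames whose tag warrants it. The `TaggedFrame` type, its field names, and the 0.6 confidence threshold are assumptions made for this illustration; they are not the camera's actual chunk data layout or SDK API.

```python
from dataclasses import dataclass


@dataclass
class TaggedFrame:
    """Hypothetical host-side view of a frame plus its inference tag."""
    frame_id: int
    label: str         # class predicted on the camera
    confidence: float  # probability reported for that class


def needs_inspection(frame, labels_of_interest, min_confidence=0.6):
    """Decide whether the host should run rules-based processing."""
    return (frame.label in labels_of_interest
            and frame.confidence >= min_confidence)


def select_frames(frames, labels_of_interest, min_confidence=0.6):
    """Return the IDs of frames that should go to the rules-based stage."""
    return [f.frame_id for f in frames
            if needs_inspection(f, labels_of_interest, min_confidence)]


if __name__ == "__main__":
    stream = [
        TaggedFrame(1, "part_present", 0.93),
        TaggedFrame(2, "empty_belt", 0.88),
        TaggedFrame(3, "part_present", 0.71),
        TaggedFrame(4, "part_present", 0.40),  # too uncertain to act on
    ]
    print(select_frames(stream, {"part_present"}))
```

Filtering on the tag first means the existing rules-based code stays unchanged and simply receives fewer, more relevant frames.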

Using the Firefly DL translates into significant space savings, as the computing hardware used in traditional smart cameras is less power efficient and much larger than the VPU in the Firefly DL. At just 27 mm x 27 mm, the Firefly DL is ready for integration into tight spaces.

Teledyne FLIR Neuro technology provides an open platform supporting both TensorFlow and Caffe. This gives users the flexibility to work with frameworks that are both easily accessible and among the most advanced available. In contrast, smart cameras are programmed using proprietary tools that may lag the most recent advances.

What are the advantages of inference on-camera?

Enabling inference on the edge of a vision system delivers improvements in system speed, reliability, power efficiency, and security.

- Speed: Inference on the edge, like other forms of edge computing, moves image processing away from a central server and close to your data’s source. Rather than transmitting whole images to a remote server, only descriptive data needs to be sent. This greatly reduces the amount of data that a system must transmit, minimizing network bandwidth and system latency.

- Reliability: For certain applications, the Firefly DL can eliminate dependence on server and network infrastructure, increasing system reliability. With its built-in VPU, the Firefly DL operates as a stand-alone sensor. It can capture images and make decisions based on them, then trigger actions using GPIO signalling.

- Power Efficiency: Triggering a vision system only when needed means more processing time can be spent on traditional rules-based image processing and analysis. Deep learning inference can be used to trigger high-power image analysis when specific conditions are met. The Myriad 2 VPU delivers additional power savings by supporting cascaded networks. This enables multiple tiers of analysis, with more complex, higher-powered networks being called only if the conditions of the previous network are met.

- Security: The small amount of data that is transmitted is easily encrypted, improving system security.
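The cascaded networks mentioned under Power Efficiency can be sketched as a chain of stages in which each later, more expensive stage runs only if the previous stage's result passes a gate. The two stage functions below are trivial stand-ins for real networks, and all names and thresholds are hypothetical; this shows the control flow only, not how the Myriad 2 schedules networks.

```python
def presence_check(sample):
    """Stand-in for a small, low-power network: is anything in view?"""
    return sum(sample) / len(sample) > 0.5


def grade_part(sample):
    """Stand-in for a larger, more expensive network."""
    return "reject" if min(sample) < 0.2 else "accept"


def continue_if_true(result):
    """Gate: run the next stage only when the result is truthy."""
    return bool(result)


def always_stop(result):
    """Gate for the final stage: nothing follows it."""
    return False


# Each stage is (name, model, gate); later stages cost more to run.
STAGES = [
    ("presence", presence_check, continue_if_true),
    ("grade", grade_part, always_stop),
]


def run_cascade(sample, stages=STAGES):
    """Run stages in order, stopping as soon as a gate says no."""
    results = []
    for name, model, should_continue in stages:
        result = model(sample)
        results.append((name, result))
        if not should_continue(result):
            break
    return results


if __name__ == "__main__":
    print(run_cascade([0.1, 0.2]))       # stops after the cheap stage
    print(run_cascade([0.9, 0.1, 0.9]))  # reaches the expensive stage
```

Because most frames in a typical stream fail the first gate, the expensive stage runs rarely, which is where the power saving comes from.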

How do I get started?

The Firefly DL camera provides an easy path for taking deep learning from R&D to working on your application. It is ready to function as a stand-alone sensor, capturing images and making decisions based on them that trigger GPIO actions.

A complete inference-on-the-edge vision system can be built for less than $500 with the Firefly DL together with the Intel OpenVINO toolkit. See our article, "How to build your first deep learning classification system".

Conclusion

Deep learning inference will fundamentally change the way vision systems are designed and programmed. It will enable complex and subjective decisions to be made more quickly and accurately than would be possible using traditional rules-based approaches. The Firefly DL marries machine vision with deep learning by combining a Sony Pregius sensor, a GenICam interface, and an on-camera VPU for deep learning inference. This new class of inference camera provides the ideal path to deploying deep learning inference in machine vision applications.