Streaming 4x Cameras with Small Carrier Board: Fast Prototype

Embedded vision components are popular and appear in a wide range of applications. What all these applications have in common is the need to pack more and more functionality into tight spaces. Often, it is also very advantageous for these systems to make decisions on the edge, especially in inspection, mobile robotics, traffic systems, and various types of unmanned vehicles. To enable such systems, and to make rapid prototyping possible, Teledyne FLIR has introduced the Quartet™ Embedded Solution for TX2. This customized carrier board enables easy integration of up to four USB3 machine vision cameras at full bandwidth. It includes the Nvidia Jetson deep learning hardware accelerator and comes pre-integrated with Teledyne FLIR’s Spinnaker® SDK.

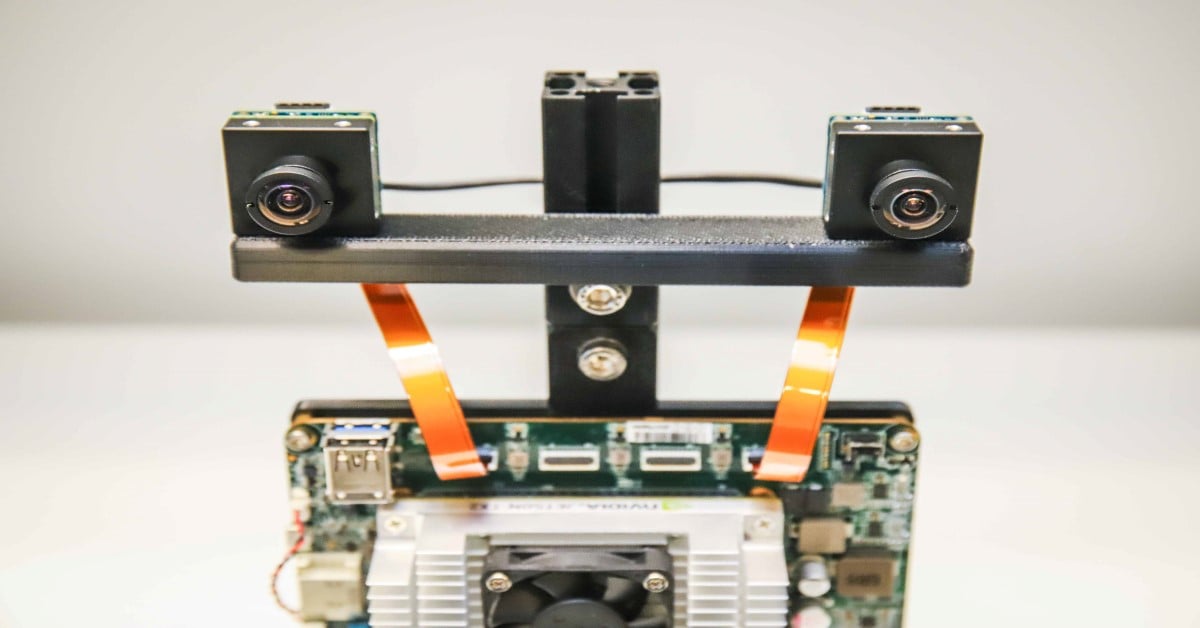

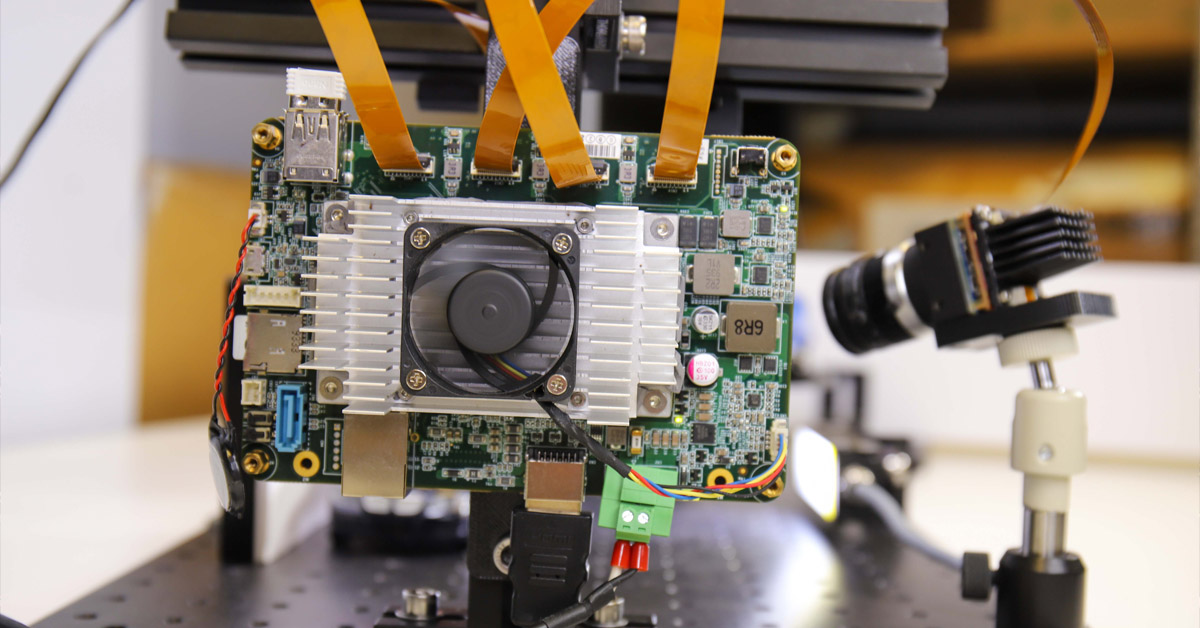

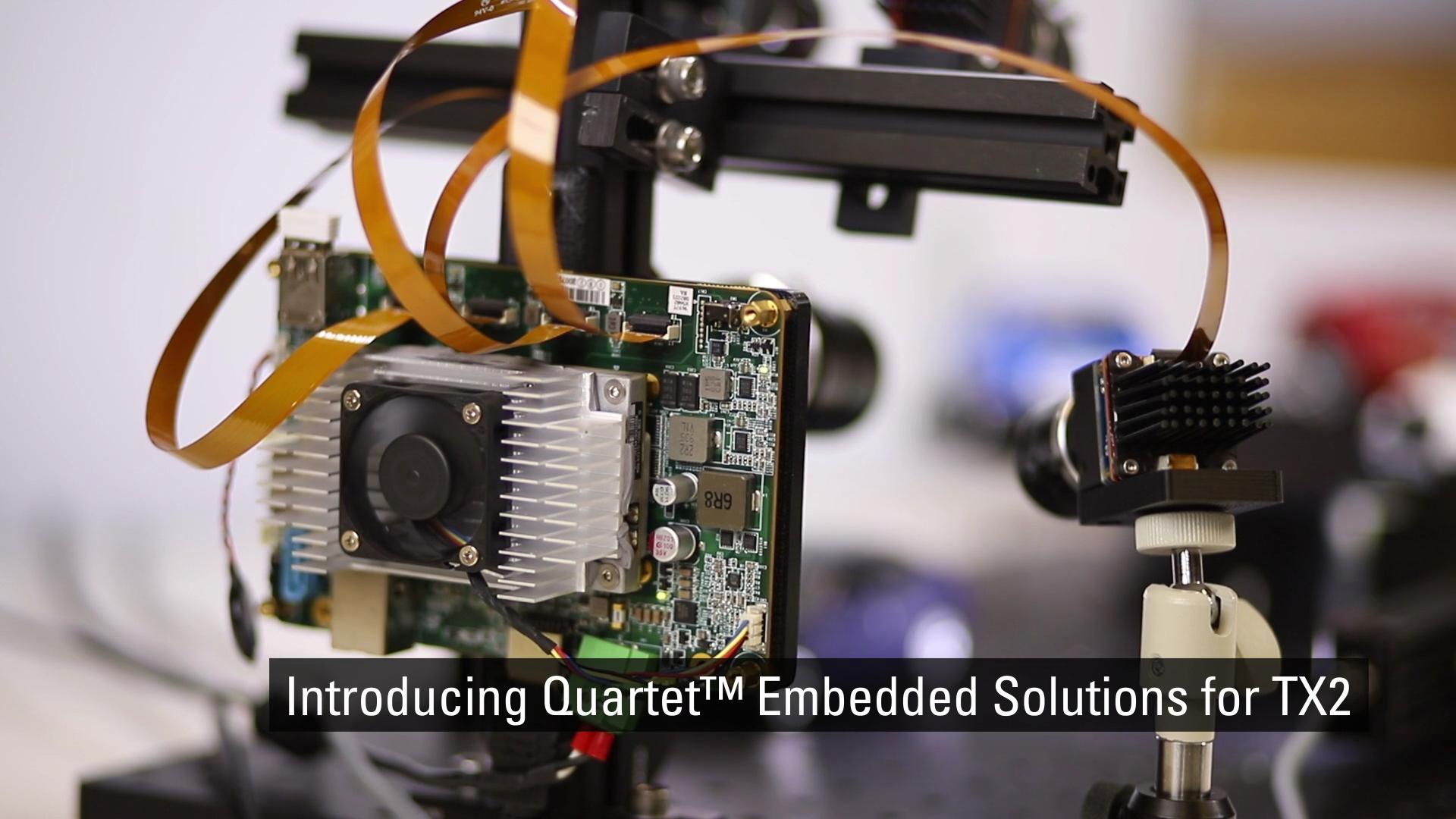

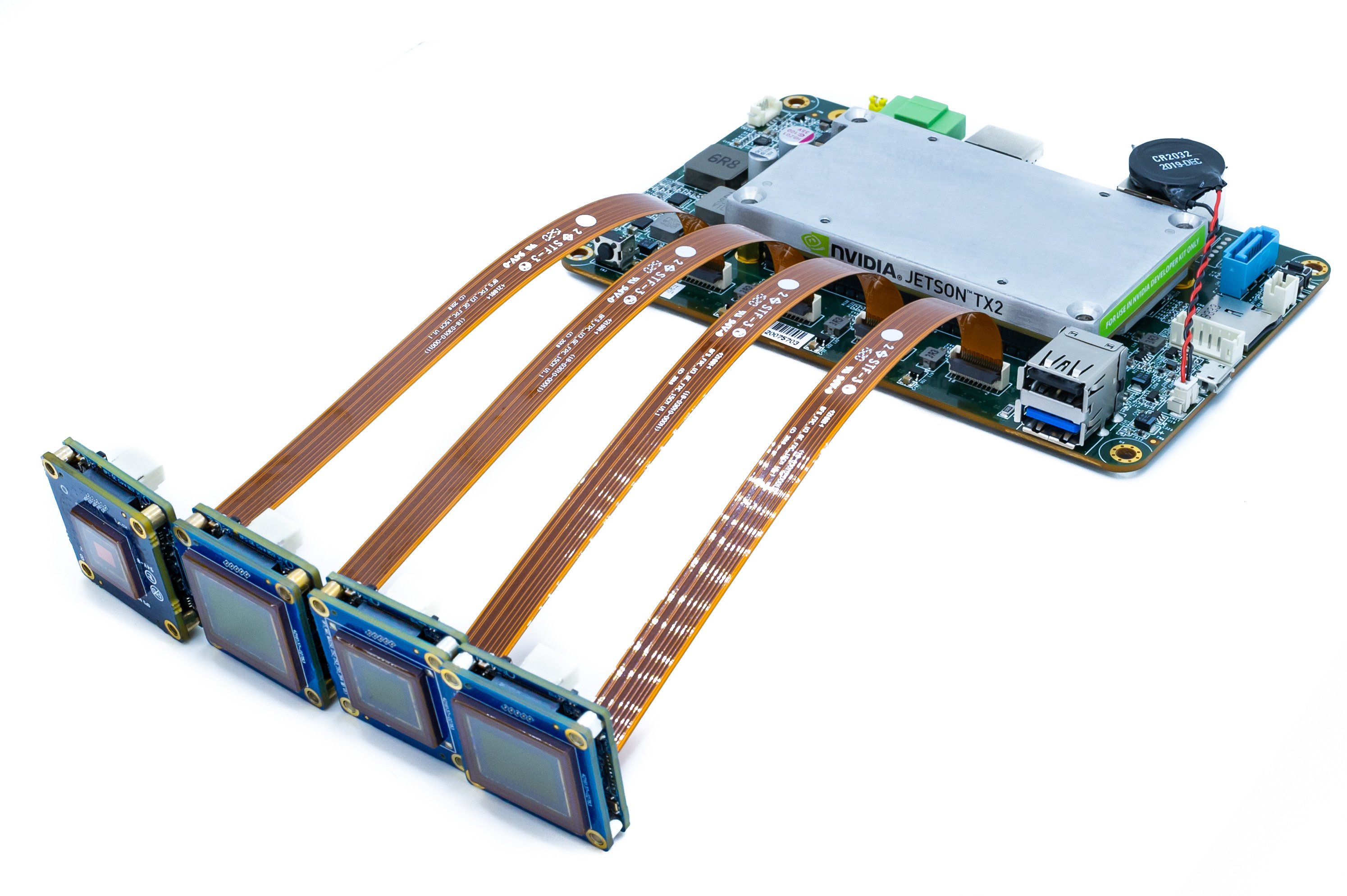

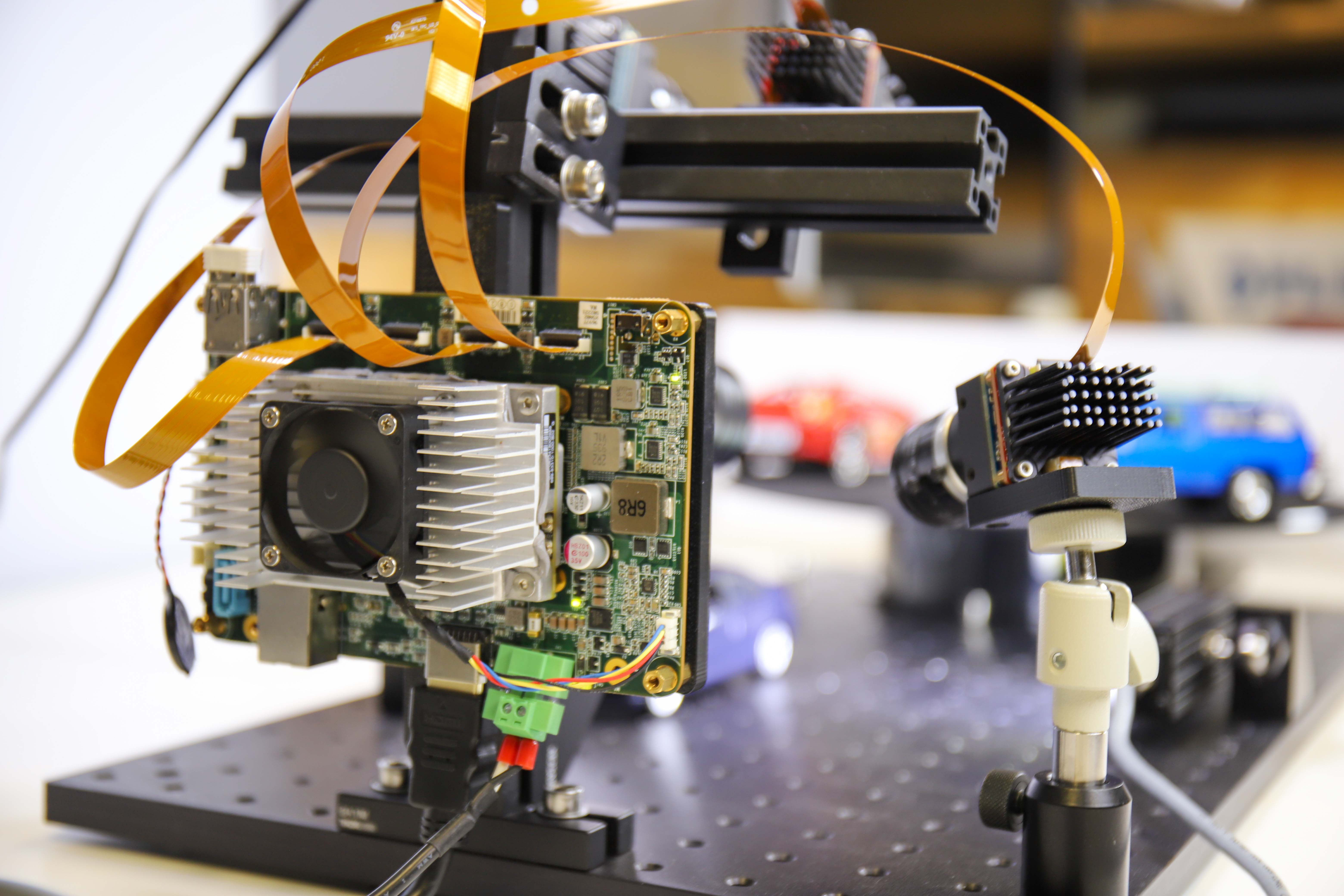

Figure 1: Prototype setup for all four applications

In this very practical article, to highlight what the Quartet can enable, we describe the steps taken to develop a prototype inspired by intelligent transportation systems (ITS) that runs four applications simultaneously, three of which use deep learning:

- Application 1: License plate recognition using deep learning

- Application 2: Vehicle type categorization using deep learning

- Application 3: Vehicle color classification using deep learning

- Application 4: See through windshield (past reflection & glare)

Shopping list: Hardware & Software Components

1) SOM for processing:

New Teledyne FLIR Quartet carrier board for TX2 includes:

- 4x TF38 connectors with dedicated USB3 controllers

- Nvidia Jetson TX2 module

- Pre-installed with Teledyne FLIR’s powerful and easy-to-use Spinnaker SDK to ensure plug and play compatibility with Teledyne FLIR Blackfly S board level cameras

- Nvidia Jetson deep learning hardware accelerator allows for complete decision-making systems on a single compact board

Figure 2: Quartet Embedded Solution with TX2 pictured with 4 Blackfly S cameras and 4 FPC cables.

2) Cameras & Cables

- 3x standard Teledyne FLIR Blackfly S USB3 board level cameras, which offer the same rich feature set as the cased versions with the latest CMOS sensors and integrate seamlessly with the Quartet

- 1x custom camera: Blackfly S USB3 board level camera with Sony IMX250MZR polarized sensor

- Cables: TF38 FPC cables allowing power and data to be transmitted over a single cable to save on space

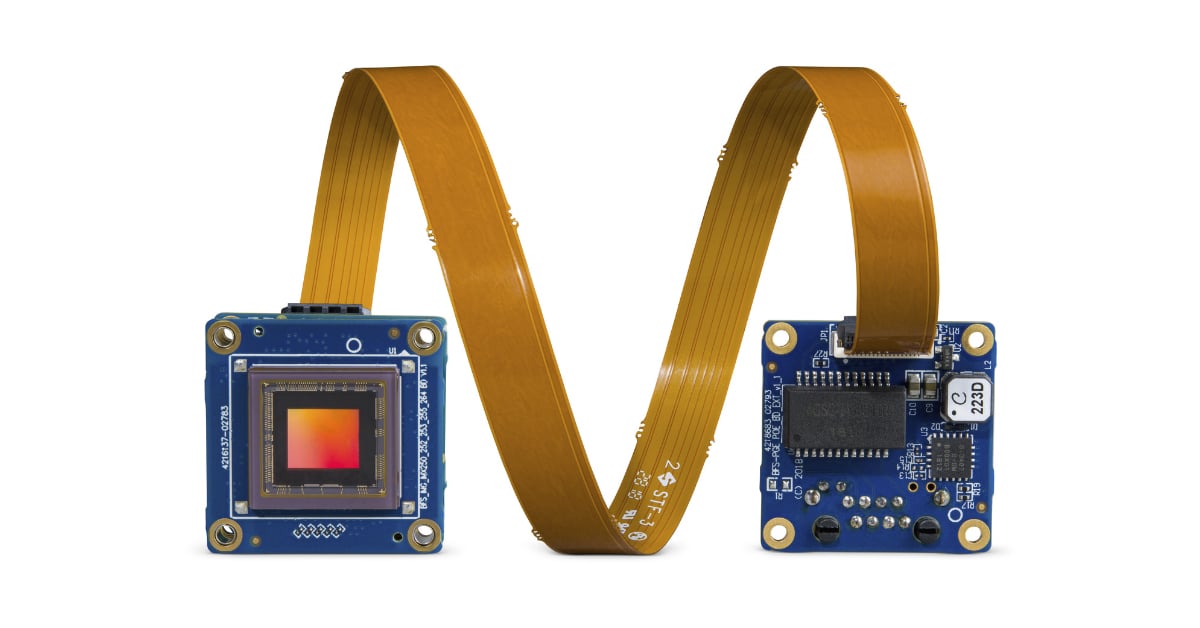

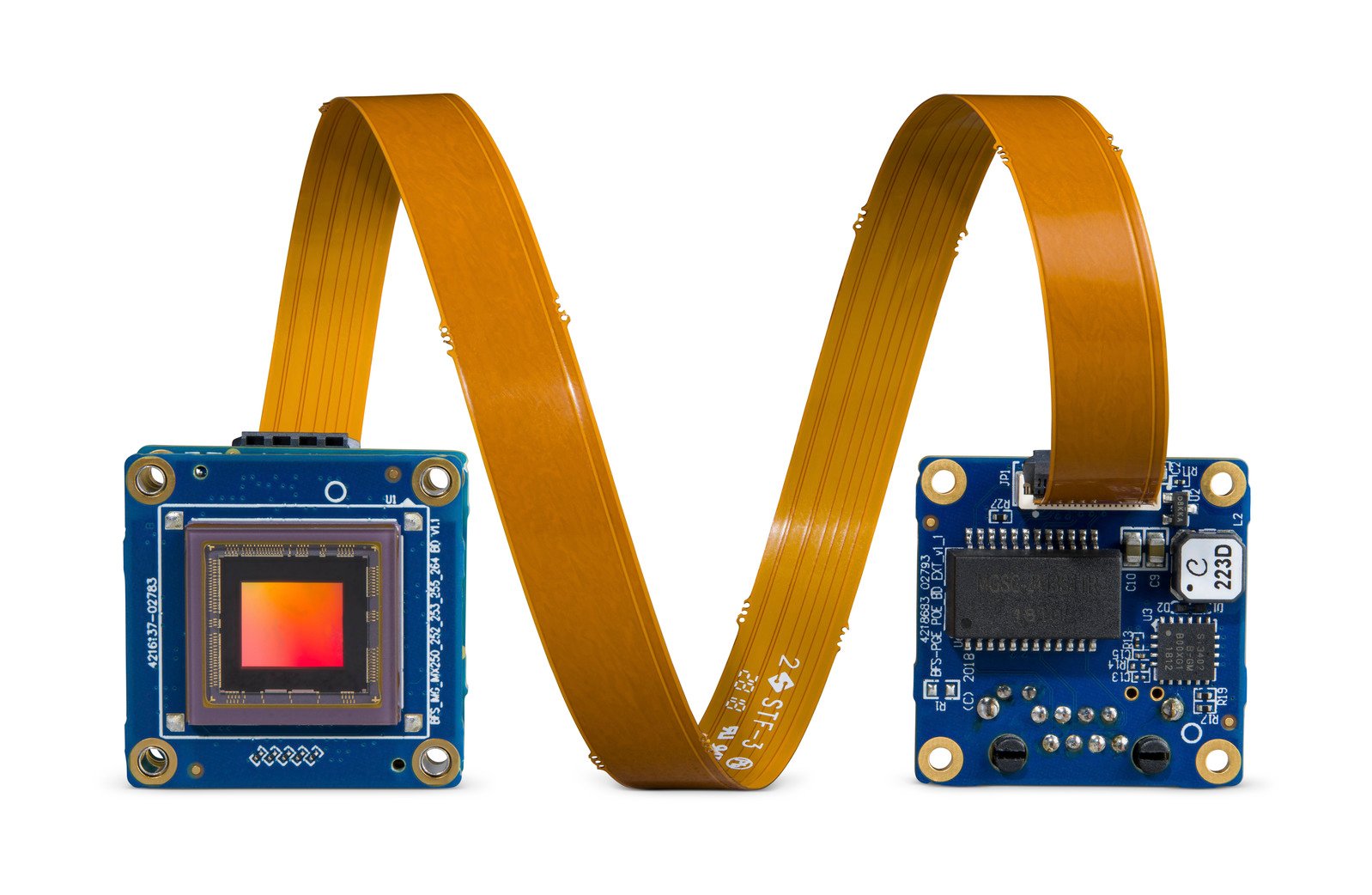

Figure 3: Blackfly S Board Level camera with FPC cable


3) Lighting: LED lights to provide enough illumination for short exposure times, which avoid motion blur on the license plates.
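The lighting requirement follows from a simple relationship: motion blur in pixels equals the distance the plate travels during the exposure divided by the size of one pixel projected onto the plate. Faster targets therefore need shorter exposures, and shorter exposures need more light. The sketch below estimates the longest exposure that keeps blur within a given budget. It assumes motion parallel to the image rows, and the speed, field of view, and image width in the example are hypothetical values, not measurements from this prototype.

```python
def max_exposure_us(speed_m_per_s: float, field_of_view_m: float,
                    image_width_px: int, max_blur_px: float = 1.0) -> float:
    """Longest exposure (microseconds) that keeps motion blur within max_blur_px.

    Assumes the target moves parallel to the image rows and that the horizontal
    field of view at the target's distance is field_of_view_m wide.
    """
    metres_per_px = field_of_view_m / image_width_px  # size of one pixel on the target
    # blur_px = speed * exposure / metres_per_px, solved for the exposure time
    max_exposure_s = max_blur_px * metres_per_px / speed_m_per_s
    return max_exposure_s * 1e6


if __name__ == "__main__":
    # Hypothetical: toy car at 0.5 m/s, 0.4 m field of view, 4096-pixel-wide image
    print(round(max_exposure_us(0.5, 0.4, 4096), 1))  # roughly 195 microseconds
```

Exposures this short collect little light per frame, which is why dedicated LED lighting helps.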

Application 1: License plate recognition using deep learning

Development time: 2-3 weeks, mostly spent making it more robust and faster

Training Images: Included with LPDNet

For license plate recognition, we deployed Nvidia’s off-the-shelf License Plate Detection (LPDNet) deep learning model to locate the license plates, and the open-source Tesseract OCR engine to recognize the letters and numbers. The camera is a Blackfly S board level 8.9 MP color camera (BFS-U3-88S6C-BD) with the Sony IMX267 sensor. We limited the region of interest (ROI) for license plate detection to speed up processing and applied tracking to improve robustness. The output includes bounding boxes of the license plates together with the corresponding license plate characters.

Figure 4: Streaming with bounding boxes of the license plates and license plate characters.
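Two of the optimizations described above can be sketched in a few lines. Running the detector on a cropped ROI means its boxes must be shifted back into full-frame coordinates, and tracking a plate across frames lets several noisy OCR readings be combined. The sketch below assumes the plate text is the string returned by Tesseract, normalizes it to uppercase letters and digits (a hypothetical rule; real plate formats vary), and reports the most frequent reading over the last few frames. The function and class names are hypothetical, and this is one possible approach rather than the prototype's implementation.

```python
from collections import Counter, deque
from typing import Deque, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels

# Hypothetical character whitelist for plate text
ALLOWED = set("ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789")


def roi_to_frame(box_in_roi: Box, roi_origin: Tuple[int, int]) -> Box:
    """Map a box detected inside a cropped ROI back to full-frame coordinates."""
    x, y, w, h = box_in_roi
    ox, oy = roi_origin  # top-left corner of the ROI in the full frame
    return (x + ox, y + oy, w, h)


def normalize_plate(raw: str) -> str:
    """Uppercase raw OCR text and drop everything except letters and digits."""
    return "".join(ch for ch in raw.upper() if ch in ALLOWED)


class PlateVoter:
    """Keep the last N readings for one tracked plate and report the most common one."""

    def __init__(self, window: int = 5) -> None:
        self.readings: Deque[str] = deque(maxlen=window)

    def add(self, raw: str) -> Optional[str]:
        text = normalize_plate(raw)
        if text:  # ignore frames where OCR returned nothing useful
            self.readings.append(text)
        if not self.readings:
            return None
        # most_common(1) returns [(text, count)]; ties go to the reading seen first
        return Counter(self.readings).most_common(1)[0][0]
```

For example, feeding the readings `"ab 123"`, `"A8123"`, `"AB123 "` and `"AB123"` into one `PlateVoter` yields `"AB123"`, because three of the four frames agree.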

Application 2: Vehicle type categorization using deep learning

Development time: ~12 hours including image collection and annotation

Training Images: ~300

For vehicle type categorization, we used transfer learning to train our own deep learning object detection model for the three toy cars in the setup: an SUV, a sedan, and a truck. We collected approximately 300 training images of the setup, taken at various distances and angles. The camera is a Blackfly S board level 5 MP color camera (BFS-U3-51S5C-BD) with the Sony IMX250 sensor. Annotating the bounding boxes of the toy cars took approximately 3 hours. Training our own SSD MobileNet object detection model via transfer learning took around half a day on an Nvidia GeForce GTX 1080 Ti GPU. With its GPU hardware accelerator, the Jetson TX2 module runs deep learning inference efficiently and outputs bounding boxes of the cars together with the corresponding vehicle types.

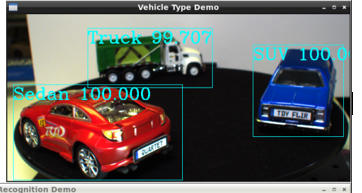

Figure 5: Streaming with bounding boxes and pre-set vehicle types & confidence factor identified
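Detectors such as SSD MobileNet produce many candidate boxes, each with a class ID and a confidence score. If the exported model does not already apply non-maximum suppression (NMS), a minimal post-processing step like the sketch below turns raw detections into labeled boxes: drop low-confidence candidates, then greedily suppress overlapping boxes of the same class. The class-ID mapping and both thresholds are hypothetical placeholders, not values from this prototype.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, int, float]       # (box, class_id, score)

# Hypothetical class-ID mapping; real IDs depend on how the training labels were defined
LABELS: Dict[int, str] = {1: "SUV", 2: "sedan", 3: "truck"}


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(dets: List[Detection], min_score: float = 0.5,
                iou_thresh: float = 0.45) -> List[Tuple[Box, str, float]]:
    """Drop low-confidence boxes, then apply per-class greedy NMS."""
    candidates = sorted((d for d in dets if d[2] >= min_score),
                        key=lambda d: d[2], reverse=True)
    kept: List[Detection] = []
    for det in candidates:
        box, cls, _ = det
        # Keep unless a higher-scoring box of the same class overlaps it heavily
        if all(k[1] != cls or iou(box, k[0]) < iou_thresh for k in kept):
            kept.append(det)
    return [(box, LABELS.get(cls, f"class_{cls}"), score) for box, cls, score in kept]
```

Running NMS per class means an SUV box never suppresses an overlapping truck box, which matters when toy cars sit close together.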

Application 3: Vehicle color classification using deep learning

Development time: Reused the model from the vehicle type application, plus 2 days to classify color, integrate, and test

Training Images: Reused the same ~300 images as the vehicle type application

For vehicle color classification, we ran the same deep learning object detection model as above to detect the cars, followed by image analysis on the bounding boxes to classify their color. The output includes bounding boxes of the cars together with the corresponding vehicle colors. The camera is a Blackfly S board level 3 MP color camera (BFS-U3-32S4C-BD) with the Sony IMX252 sensor.

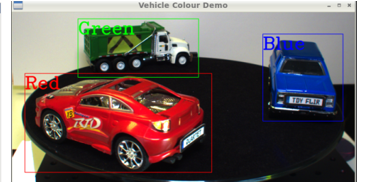

Figure 6: Streaming with bounding boxes and pre-set color types identified
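The article does not specify which image analysis was used for color classification. One simple approach, sketched below as an assumption, averages the pixels in the central part of each detected box (to reduce background and window pixels), converts the mean to HSV with Python's standard `colorsys` module, and maps brightness, saturation, and hue to a color name. The thresholds and hue bins are hypothetical and would need tuning for real vehicles and lighting.

```python
import colorsys
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255

# Hypothetical hue bins in degrees (upper bound exclusive); tune for the actual vehicles
HUE_BINS = [(15, "red"), (45, "orange"), (70, "yellow"), (170, "green"),
            (260, "blue"), (330, "purple"), (360, "red")]


def center_crop(pixels: Sequence[Sequence[Pixel]], frac: float = 0.5) -> List[Pixel]:
    """Flatten the central `frac` of a box (rows x cols) into a list of pixels."""
    h, w = len(pixels), len(pixels[0])
    dy, dx = int(h * (1 - frac) / 2), int(w * (1 - frac) / 2)
    return [p for row in pixels[dy:h - dy] for p in row[dx:w - dx]]


def classify_color(pixels: Sequence[Sequence[Pixel]]) -> str:
    """Name the dominant color of a detected box from its mean RGB value."""
    crop = center_crop(pixels)
    n = len(crop)
    # Mean of each channel, scaled to the 0-1 range colorsys expects
    r, g, b = (sum(p[i] for p in crop) / (255.0 * n) for i in range(3))
    hue, sat, val = colorsys.rgb_to_hsv(r, g, b)
    if val < 0.2:
        return "black"
    if sat < 0.25:  # low saturation: achromatic colors
        return "white" if val > 0.8 else "gray"
    deg = hue * 360.0
    for upper, name in HUE_BINS:
        if deg < upper:
            return name
    return "red"  # fallback; hue from colorsys is always below 360 degrees
```

Averaging is crude (a mostly black car with chrome trim may drift toward gray), but it needs no extra training data, which fits the short development time reported for this application.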

Application 4: See through windshield (past reflection & glare)

Glare reduction is critical for traffic applications such as seeing through a windshield to monitor HOV lanes, check seatbelt compliance, or even detect drivers using their phones while driving. For this purpose, we built a custom camera by combining a Blackfly S USB3 board level camera with the 5 MP Sony IMX250MZR polarization sensor. This board level polarization camera is not a standard product, but Teledyne FLIR can easily swap in different sensors to offer custom camera options, which let us showcase glare removal. We simply streamed the camera images through Teledyne FLIR’s SpinView GUI, which offers various "Polarization Algorithm" options, such as quad mode and glare reduction mode, to show the glare reduction on a stationary toy car.

Figure 7: The Spinnaker SDK GUI (SpinView) offers various "Polarization Algorithm" options, such as quad mode and glare reduction mode, shown here reducing glare on a stationary toy car. Quad mode shows the four images corresponding to the four polarization angles (0°, 45°, 90°, and 135°).
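Glare reduction works because light reflected from a windshield tends to be partially polarized, while light from inside the vehicle is less so. The IMX250MZR places a linear polarizer at 0°, 45°, 90°, or 135° over each pixel in a repeating 2x2 pattern, so every 2x2 cell samples all four angles. The sketch below splits the raw mosaic into the four sub-images (as in quad mode), computes the linear Stokes parameters, and keeps, per cell, the minimum intensity a rotating polarizer would pass. This is a standard technique, not necessarily what SpinView's glare reduction mode does, and the angle layout in `PATTERN` is an assumption to verify against the sensor documentation.

```python
import math
from typing import Dict, List

Image = List[List[float]]

# ASSUMPTION: polarizer angle (degrees) at each (row, col) position of the 2x2 cell.
# Verify against the IMX250MZR datasheet before relying on it.
PATTERN = {(0, 0): 90, (0, 1): 45, (1, 0): 135, (1, 1): 0}


def split_quad(raw: Image) -> Dict[int, Image]:
    """Split a polarized mosaic into four half-resolution images keyed by angle."""
    return {angle: [row[dx::2] for row in raw[dy::2]]
            for (dy, dx), angle in PATTERN.items()}


def glare_reduced(raw: Image) -> Image:
    """Per 2x2 cell, estimate the darkest intensity a rotating linear polarizer would pass.

    With S0 = (I0 + I45 + I90 + I135) / 2, S1 = I0 - I90 and S2 = I45 - I135, the
    intensity passed at analyzer angle t is (S0 + S1*cos 2t + S2*sin 2t) / 2,
    whose minimum over t is (S0 - sqrt(S1^2 + S2^2)) / 2.
    """
    q = split_quad(raw)
    h, w = len(q[0]), len(q[0][0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            i0, i45, i90, i135 = q[0][y][x], q[45][y][x], q[90][y][x], q[135][y][x]
            s0 = (i0 + i45 + i90 + i135) / 2.0
            s1, s2 = i0 - i90, i45 - i135
            # Clamp at zero in case sensor noise makes the estimate slightly negative
            out[y][x] = max(0.0, (s0 - math.hypot(s1, s2)) / 2.0)
    return out
```

For unpolarized light all four angles read the same and the result is half the total intensity; for fully polarized glare the result drops to zero. In practice the same arithmetic would be vectorized over a NumPy frame; plain lists keep this sketch dependency-free.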

Overall System Optimization

While each of the four prototypes worked well independently, overall performance was quite poor when all the deep learning models ran simultaneously. Nvidia’s TensorRT SDK provides a deep learning inference optimizer and runtime for Nvidia hardware such as the Jetson TX2 module. Optimizing our deep learning models with TensorRT yielded roughly a 10x performance improvement. On the hardware side, we attached a heat sink to the TX2 module to prevent overheating, as it ran quite hot with all the applications running. In the end, we achieved good frame rates with all four applications running together: 14 fps for vehicle type identification, 9 fps for vehicle color classification, 4 fps for automatic number plate recognition, and 8 fps for the polarization camera.
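Frame rates like these are reported per application, since each pipeline runs at its own pace. The article does not say how they were measured; a minimal sketch of a sliding-window frames-per-second meter that each processing loop could call once per processed frame is shown below. The class name and window size are hypothetical.

```python
import time
from collections import deque
from typing import Deque, Optional


class FpsMeter:
    """Frames per second over a sliding window of recent frame timestamps."""

    def __init__(self, window: int = 30) -> None:
        self.stamps: Deque[float] = deque(maxlen=window)

    def tick(self, now: Optional[float] = None) -> float:
        """Record one processed frame and return the current estimate.

        Returns 0.0 until at least two frames have been recorded. `now` can be
        passed explicitly (in seconds) for testing; otherwise a monotonic clock is used.
        """
        self.stamps.append(time.perf_counter() if now is None else now)
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        # N timestamps span N - 1 frame intervals
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0
```

A sliding window smooths frame-to-frame jitter while still reflecting slowdowns, such as thermal throttling, within a few seconds.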

We developed this prototype in a relatively short time thanks to the ease of use and reliability of the Quartet Embedded Solution and Blackfly S board level cameras. The TX2 module with pre-installed Spinnaker SDK ensures plug & play compatibility with all the Blackfly S board level cameras, which can stream reliably at full USB3 bandwidth via the TF38 connection. Nvidia provides many tools to facilitate development and optimization on the TX2 module. The Quartet is now available for purchase online on flir.com as well as through our offices and global distributor network.