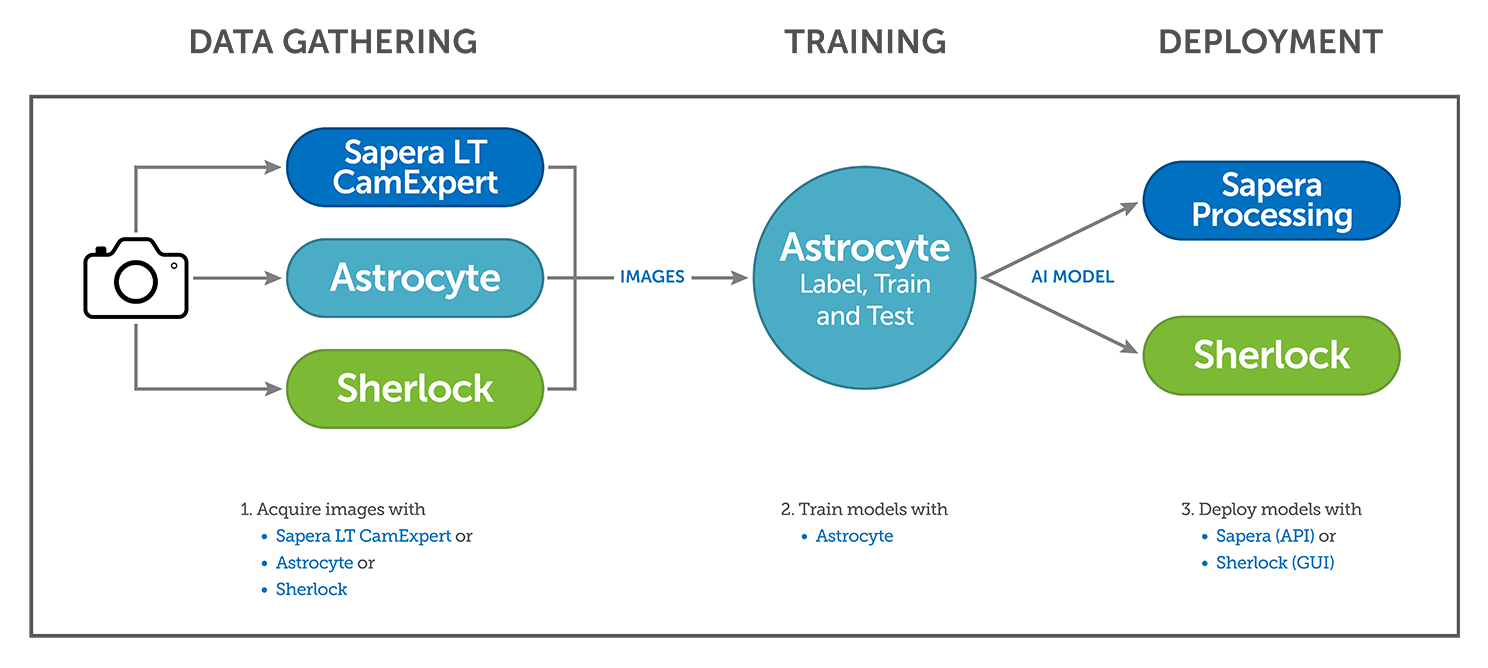

Teledyne DALSA Astrocyte™ is a code-free AI training tool to quickly deploy AI models for machine vision solutions. Astrocyte empowers users to harness their own images of products, samples, and defects to train neural networks to perform a variety of tasks such as anomaly detection, classification, object detection and segmentation. With its highly flexible graphical user interface, Astrocyte allows visualizing and interpreting models for performance/accuracy as well as exporting these models to files that are ready for runtime in Teledyne DALSA Sapera™ Processing and Sherlock™ vision software platforms.

Why AI?

Traditional Machine Vision Tools

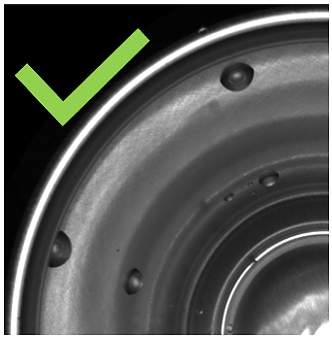

✗ Confuse water droplets and surface damage

✗ Can’t handle shade and perspective changes

✗ Miss subtle surface damage

AI Classification Algorithm

✓ Ignores water droplets

✓ Is robust to variations in surface finish and perspective

✓ Can detect a full range of defects

No AI Expertise or Code Required

- Train a model without having to learn about any AI parameters

- Simply choose one of three tuning depth options (low, medium, high), and easily get an optimized AI model

- Graphical user interface for rapid machine vision development

- Train AI models quickly (under 10 minutes with good data)

Save Time Labelling Images and Training

- Continual learning lets you update classification models on the factory floor without retraining, saving hours of model-update time.

- Automatic labelling (via SSOD or pretrained models) generates bounding boxes and labels

- Import existing label sets (.txt, PASCAL VOC, MS COCO, KITTI)

- Segmentation annotation with different shapes (polygon, rectangle, circle, torus tools)

- Decrease training effort with pre-trained AI models (fewer samples required)

Key Features

- Graphical User Interface for rapid machine vision application development.

- Automatic tuning of training hyperparameters for maximum ease-of-use for non-experts in AI (also a manual mode for experts).

- Automatic generation of annotations via pre-trained models or semi-supervised training.

- Masking of regions to exclude from inspection via ROI markers of multiple shapes.

- Highly accelerated inference engine for optimal runtime speed on either GPU or CPU.

- Training on multiple GPUs to reduce training time.

- Continual Learning (aka Lifelong Learning) in classification for further learning at runtime.

- Object detection at an angle (aka rotated bounding boxes).

- Location of small defects in high-resolution images via tiling mechanism.

- Easy integration with Sapera Processing and Sherlock vision software for runtime inference.

- Assess AI models via visual tools such as heatmaps, loss function curves, and confusion matrices.

- Full image data privacy – train and deploy AI models on local PC.

Graphical interfaces in Sapera and Astrocyte software make it easier to implement your own deep learning network. Learn more from this article.

Combine with other Teledyne Components

- Leverage Teledyne’s Sherlock or Sapera Processing to get the best of rule-based algorithms combined with AI models for a full solution

- Live video acquisition from Teledyne and third-party cameras

Application Examples

Astrocyte Inspections in the Recycling Industry

- Plastic particles, such as from beverage bottles, undergo a multiple-step recycling and inspection process before they are reused in new plastic products.

- Astrocyte is used to locate and classify more than 30 types of materials with high accuracy to ensure high quality material with less than 10 parts per million of contaminants.

Article originally published in Vision Systems Design.

Astrocyte Improves Quality & Efficiency in Medical Imaging

- The tiny size and random placement of fibers on X-ray detectors make them challenging and time-consuming to find with traditional methods or human inspection.

- With Astrocyte, the X-ray solutions team was able to rapidly identify all defects and even outperform their operators.

Article originally published in Novus Light.

Inspection of Screw Threads

Classification of good and bad screw tips. Subtle defects on screw threads are detected and classified as bad samples. Astrocyte detects small defects in high-resolution images of reflective surfaces. Just a few tens of samples are required to train a model with good accuracy. Classification is used when both good and bad samples are available, while Anomaly Detection is used when only good samples are available.

Location/Identification of Wood Knots

Localization and classification of various types of knots in wood planks. Astrocyte can robustly locate and classify small knots 10 pixels wide in high-resolution images of 2800 x 1024 pixels using the tiling mechanism, which preserves native resolution.
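As a rough illustration of why tiling preserves native resolution, the sketch below cuts a 2800 x 1024 image into overlapping fixed-size tiles instead of downscaling it. The tile size (512) and overlap (64) are assumptions chosen for the example, and the function names are hypothetical; Astrocyte's actual tiling parameters are not described here.

```python
from typing import List, Tuple


def tile_origins(length: int, tile: int, overlap: int) -> List[int]:
    """Start offsets of tiles that cover [0, length) along one axis."""
    if tile >= length:
        return [0]  # a single tile already covers the whole axis
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    origins = list(range(0, length - tile, stride))
    origins.append(length - tile)  # last tile sits flush with the image edge
    return origins


def tile_boxes(width: int, height: int, tile: int, overlap: int) -> List[Tuple[int, int, int, int]]:
    """Return (x, y, w, h) for every tile, in row-major order."""
    w, h = min(tile, width), min(tile, height)
    return [
        (x, y, w, h)
        for y in tile_origins(height, tile, overlap)
        for x in tile_origins(width, tile, overlap)
    ]


if __name__ == "__main__":
    boxes = tile_boxes(2800, 1024, tile=512, overlap=64)
    print(len(boxes), "tiles; first:", boxes[0], "last:", boxes[-1])
```

Because each tile is processed at its original pixel pitch, a 10-pixel knot stays 10 pixels wide; the overlap reduces the chance that a small object is split across a tile border.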

Surface Inspection of Metal Plates

Detection and segmentation of various types of defects (such as scratches and fingerprints) on brushed metal plates. Astrocyte provides output shapes where each pixel is assigned a class. Applying a blob tool to the segmentation output enables shape analysis of the defects.
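The idea of running blob analysis on a per-pixel class map can be sketched as follows. This is a minimal, dependency-free illustration using 4-connected flood fill; it is not the Sapera Processing or Sherlock blob tool, and the function name, class ids, and mask layout are assumptions made for the example.

```python
from collections import deque
from typing import Dict, List


def find_blobs(mask: List[List[int]], class_id: int) -> List[Dict]:
    """Group 4-connected pixels of `class_id` and report area and bounding box."""
    rows = len(mask)
    cols = len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != class_id or seen[r][c]:
                continue
            # Breadth-first flood fill starting from this unvisited pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            area, r0, r1, c0, c1 = 0, r, r, c, c
            while queue:
                y, x = queue.popleft()
                area += 1
                r0, r1 = min(r0, y), max(r1, y)
                c0, c1 = min(c0, x), max(c1, x)
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and mask[ny][nx] == class_id):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # bbox is (xmin, ymin, xmax, ymax), inclusive pixel coordinates.
            blobs.append({"area": area, "bbox": (c0, r0, c1, r1)})
    return blobs


if __name__ == "__main__":
    # Hypothetical class map: 0 = background, 1 = scratch, 2 = fingerprint.
    class_map = [
        [0, 1, 1, 0, 0],
        [0, 1, 0, 0, 2],
        [0, 0, 0, 2, 2],
        [1, 0, 0, 0, 0],
    ]
    print(find_blobs(class_map, 1))
    print(find_blobs(class_map, 2))
```

Area and bounding box are only the simplest shape measurements; a real blob tool typically adds features such as perimeter, orientation, and elongation.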

Deep Learning Architectures

Astrocyte Supports the Following Deep Learning Architectures:

Classification

Description

A generic classifier to identify the class of an image.

Typical Usage

Use in applications where multiple class identification is required. For example, it can be used to identify several classes of defects in industrial inspection. It can train in the field using continual learning.

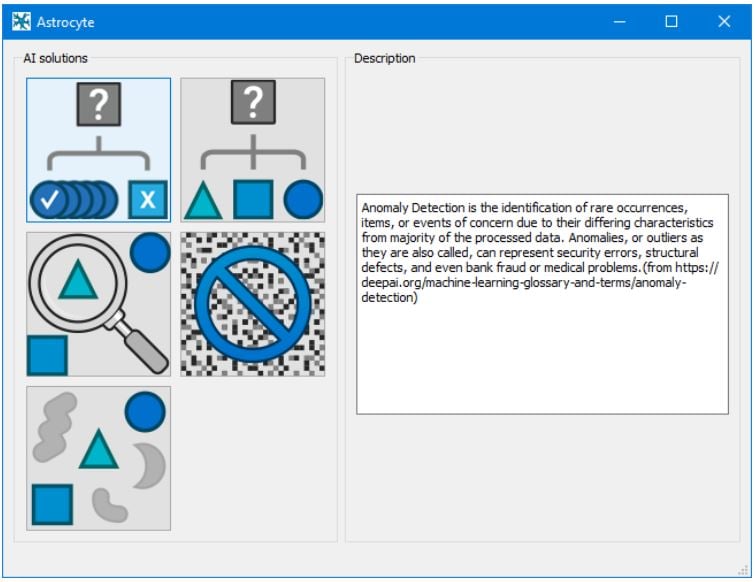

Anomaly Detection

Description

A binary classifier (good/bad) trained on “good” images only.

Typical Usage

Use in defect inspection where simply finding defects is sufficient (no need to classify defects). Useful on imbalanced datasets where many “good” images and only a few “bad” images are available. Does not require manual graphical annotations and is very practical on large datasets.
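One generic way to turn "trained on good images only" into a good/bad decision is to calibrate a threshold on anomaly scores measured from good samples. The sketch below illustrates that idea only; how Astrocyte computes or thresholds its scores is not described here, and the score values, the `k` factor, and the function names are all assumptions.

```python
import statistics
from typing import Sequence


def fit_threshold(good_scores: Sequence[float], k: float = 3.0) -> float:
    """Threshold = mean + k * standard deviation of scores from good samples."""
    mean = statistics.mean(good_scores)
    std = statistics.pstdev(good_scores)
    return mean + k * std


def is_anomalous(score: float, threshold: float) -> bool:
    """Flag a sample as 'bad' when its score exceeds the calibrated threshold."""
    return score > threshold


if __name__ == "__main__":
    # Hypothetical anomaly scores produced by some model on known-good images.
    good = [0.10, 0.12, 0.11, 0.09, 0.13]
    threshold = fit_threshold(good, k=3.0)
    for score in (0.12, 0.40):
        print(score, "bad" if is_anomalous(score, threshold) else "good")
```

No defective images are needed to set the threshold, which is why this style of model suits datasets where bad samples are rare.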

Object Detection

Description

An all-in-one localizer and classifier. Object detection finds the location and the orientation of an object in an image and classifies it.

Typical Usage

Use in applications where the position and orientation of objects is important. For example, it can be used to provide the location and class of defects in industrial inspection.

Segmentation

Description

A pixel-wise classifier. Segmentation associates each image pixel with a class. Connected pixels of the same class create identifiable regions in the image.

Typical Usage

Use in applications where the size and/or shape of objects are required. For example, it can be used to provide location, class, and shape of defects in industrial inspection.

Astrocyte GUI

Creating Dataset

Generating Image Samples

- Connect to a camera (Teledyne or third-party) or a frame grabber to acquire live video

- Save images while acquiring a live video stream (manually on click or automatically)

Importing Image Samples

- File selection based on folder layout, prefix/suffix and regular expressions.

- Image file formats: PNG, JPG, BMP, GIF and TIFF.

- Image pixel formats: Monochrome 8-16 bits, RGB 24 and 32-bit.

- Automatic (random) or manual distribution of images into training and validation datasets.

- Adjustable image size for optimizing memory usage.

- Creation of a mask via visual editing tools to mark portions of the image to be excluded.
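To make the import options above concrete, here is a minimal sketch of selecting files with a regular expression and randomly distributing them into training and validation sets. The file names, the 20% validation fraction, and the function names are assumptions for illustration; Astrocyte performs these steps through its GUI.

```python
import random
import re
from typing import List, Sequence, Tuple


def select_files(names: Sequence[str], pattern: str) -> List[str]:
    """Keep only the file names that match the regular expression."""
    rx = re.compile(pattern)
    return [name for name in names if rx.search(name)]


def split_dataset(items: Sequence[str], validation_fraction: float = 0.2,
                  seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle reproducibly, then return (training, validation) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]


if __name__ == "__main__":
    # Hypothetical folder listing.
    names = [f"good_{i:03d}.png" for i in range(8)] + ["bad_000.png", "notes.txt"]
    images = select_files(names, r"\.png$")
    train, val = split_dataset(images, validation_fraction=0.2, seed=42)
    print(len(train), "training images,", len(val), "validation images")
```

Fixing the seed makes the split repeatable, which helps when comparing models trained on the same data.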

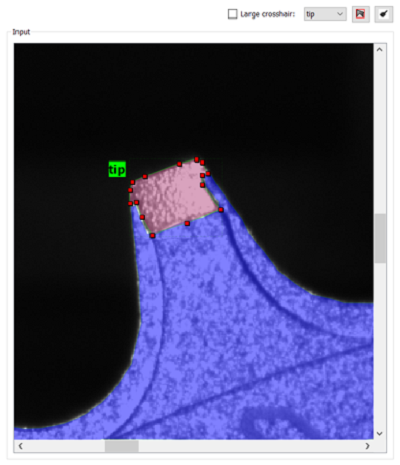

Importing/Creating Annotations

- Manually create annotations with built-in visual editing tools: rectangle, circle, polygon, brush, …

- Automatically create annotations using pre-built models.

- Automatically create annotations using Semi-Supervised Object Detection (SSOD) applied to a partially annotated dataset.

- Import annotations from user-defined text files with customizable parsing scheme.

- Import annotations from common database formats such as Pascal VOC, MS COCO and KITTI.
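For readers unfamiliar with the Pascal VOC layout mentioned above, the sketch below reads bounding boxes from a VOC-style XML annotation using Python's standard library. The sample file name and the "knot" label are made up for the example, and this is not Astrocyte's importer.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Optional, Tuple

# Hypothetical annotation in Pascal VOC layout.
SAMPLE = """<annotation>
  <filename>plank_0001.png</filename>
  <object>
    <name>knot</name>
    <bndbox><xmin>120</xmin><ymin>48</ymin><xmax>131</xmax><ymax>58</ymax></bndbox>
  </object>
</annotation>"""


def parse_voc(xml_text: str) -> Tuple[Optional[str], List[Dict]]:
    """Return the image file name and a list of labelled bounding boxes."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        if bndbox is None:
            continue  # skip objects without a bounding box
        box = {"label": obj.findtext("name")}
        for key in ("xmin", "ymin", "xmax", "ymax"):
            # Some tools write coordinates as floats, so parse via float first.
            box[key] = int(float(bndbox.findtext(key)))
        boxes.append(box)
    return root.findtext("filename"), boxes


if __name__ == "__main__":
    filename, boxes = parse_voc(SAMPLE)
    print(filename, boxes)
```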

Visualizing/Editing/Handling Dataset

- Image display and zoom.

- Annotation display as overlay graphics on image.

- Annotation selection, deletion and editing.

- Manual editing of annotations on individual samples.

- Merging of two datasets.

- Exporting dataset to file.

Training Model

Training on System GPU (minimum requirements below):

- Selection of device (when multiple devices available)

- Choice of deep learning models for optimal accuracy.

- Selection of preprocessing level: native, scaling or tiling.

- Support of rectangular input images (preserving aspect ratio)

- Access to hyperparameters such as learning rate, number of epochs, batch size, etc., for customization of training execution.

- Hyperparameters preset to commonly used default values.

- Image augmentation available for artificially increasing the number of training samples via transformations such as rotation, warping, lighting, zoom, etc.

- Training session cancelling and resuming.

- Progress bar with training duration estimation.

- Graph display of progress including accuracy and training loss at each iteration (epoch).

- Automatic or manual setting of hyperparameters.

Model Validation

- Statistics on model training.

- Metrics on model performance: accuracy, recall, mean average precision (mAP), intersection over union (IoU).

- Model testing interface to perform validation of the model on either training, validation, entire, or user-defined dataset with possibility of reshuffling samples.

- Display of confusion matrix (graph showing intersection between prediction and ground truth). Interactive selection of individual images.

- Display of heatmaps for visualization of hot regions in classification.

- Inference on sample images for testing inside Astrocyte.
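Several of the metrics listed above can be written down in a few lines. The sketch below computes intersection over union (IoU) for two axis-aligned boxes and derives accuracy and per-class recall from a confusion matrix. It uses the standard textbook definitions rather than Astrocyte's implementation, omits mAP for brevity, and the function names and sample labels are assumptions.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def confusion_matrix(truth: Sequence[Hashable], predicted: Sequence[Hashable]) -> Counter:
    """Count occurrences of each (ground truth, prediction) pair."""
    return Counter(zip(truth, predicted))


def accuracy(cm: Counter) -> float:
    """Fraction of samples whose prediction matches the ground truth."""
    correct = sum(n for (t, p), n in cm.items() if t == p)
    return correct / sum(cm.values())


def recall(cm: Counter, cls: Hashable) -> float:
    """Fraction of samples of class `cls` that were predicted as `cls`."""
    total = sum(n for (t, _), n in cm.items() if t == cls)
    return cm[(cls, cls)] / total if total else 0.0


if __name__ == "__main__":
    truth = ["good", "good", "bad", "bad", "bad"]
    predicted = ["good", "bad", "bad", "bad", "good"]
    cm = confusion_matrix(truth, predicted)
    print("accuracy:", accuracy(cm), "recall(bad):", recall(cm, "bad"))
    print("IoU:", iou((0, 0, 10, 10), (5, 5, 15, 15)))
```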

Model Export-Import

- Proprietary model format compatible with Sapera Processing and Sherlock†.

- Model contains all information required for performing inference: model architecture, trained weights, metadata such as image size and format.

- Multiple model management. Models stored in Astrocyte internal storage.

- Model can be imported into user application via Sapera Processing or Sherlock†.

Integration with Sapera Processing and Sherlock

- Both Sapera Processing and Sherlock include an inference tool for each of the supported model architectures.

- Model files are imported into the inference tool and ready for execution on live video stream.

- The inference tool can be coupled with other image processing tools such as blob analysis, pattern matching, barcode reading, etc.

- To be used in conjunction with Sapera LT or Spinnaker for acquiring images from Teledyne DALSA cameras and frame-grabbers.

- Examples available with source code.

Benchmarks

| Module | Dataset | Image Size | Input Size | RTX 3070 (ms) | RTX 3090 (ms) | RTX 4090 (ms) | Intel CPU (ms) | AMD CPU (ms) |
|---|---|---|---|---|---|---|---|---|
| Anomaly Detection | Metal | 2592 x 2048 x 1 | 1024 x 1024 x 1 | 21.0 | 13.0 | 9.0 | 275 | 645 |
| Classification | Screw | 768 x 512 x 1 | 768 x 512 x 1 | 3.1 | 2.2 | 1.2 | 31.9 | 41.3 |
| Object Detection | Hardware | 1228 x 920 x 3 | 512 x 512 x 3 | 3.8 | 3.2 | 3.0 | 31.7 | 46.3 |
| Segmentation | Scratches | 2048 x 2048 x 1 | 1024 x 1024 x 1 | 22.3 | 16.6 | 8.9 | 222 | 391 |

All device columns report inference time in milliseconds.

Module: AI model type

Intel CPU: Intel Core i9-12900K @ 3.2GHz
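The inference times in the benchmark table above can be converted to an approximate frame rate with simple arithmetic (1000 divided by the time in milliseconds). The sketch below does this for a few table entries. It assumes the figures cover inference only; whether they include acquisition, preprocessing, or data transfer is not stated, so treat the results as upper bounds.

```python
# Inference times in milliseconds, copied from the benchmark table.
INFERENCE_MS = {
    "Anomaly Detection": {"RTX 4090": 9.0, "Intel CPU": 275.0},
    "Classification": {"RTX 4090": 1.2, "Intel CPU": 31.9},
    "Object Detection": {"RTX 4090": 3.0, "Intel CPU": 31.7},
    "Segmentation": {"RTX 4090": 8.9, "Intel CPU": 222.0},
}


def frames_per_second(inference_ms: float) -> float:
    """Upper-bound throughput if inference were the only per-frame cost."""
    return 1000.0 / inference_ms


def speedup(slow_ms: float, fast_ms: float) -> float:
    """How many times faster the second device is than the first."""
    return slow_ms / fast_ms


if __name__ == "__main__":
    for module, times in INFERENCE_MS.items():
        gpu, cpu = times["RTX 4090"], times["Intel CPU"]
        print(f"{module}: {frames_per_second(gpu):.1f} fps on RTX 4090, "
              f"{speedup(cpu, gpu):.1f}x faster than the Intel CPU")
```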

System Requirements

Sapera Processing (Inference)

Operating System:

- Windows 10 or 11 (64-bit)

CPU:

- Intel® Processor w/ EM64T technology

GPU:

- With minimum 6GB RAM (recommended)

- With minimum Compute Capability 5.2 (equivalent to GTX 900 series)

- With graphics driver version 516.31 or later

- An NVIDIA GPU for higher speed (Optional)

GPU Recommendations:

- RTX 3000 and 4000 series

Astrocyte (Training)

Operating System:

- Windows 10 or 11 (64-bit)

CPU:

- Intel® Processor w/ EM64T technology

- Minimum 16GB RAM (32GB ideal)

GPU:

An NVIDIA GPU

- With minimum 8GB of RAM

- With minimum Compute Capability 5.2 (equivalent to GTX 900 series)

- With graphics driver version 516.31 or later

GPU Recommendations:

Good:

- RTX 3070/4070 or any other card with 8GB RAM

Very Good:

- RTX 3080/4080 or any other card with 12GB RAM

Best:

- RTX 3090/4090 or any other card with 24GB RAM

Specifications

- Type: AI Model Generating Tool

- Supported Languages: N/A

- Image Processing: Generating AI Models for Anomaly Detection, Classification, Object Detection, Segmentation

- Compilers Supported: N/A

- Camera/Frame Grabber Interface Supported: N/A

- Processor: Intel/AMD, GPU

Resources & Support


Astrocyte AI Trainer